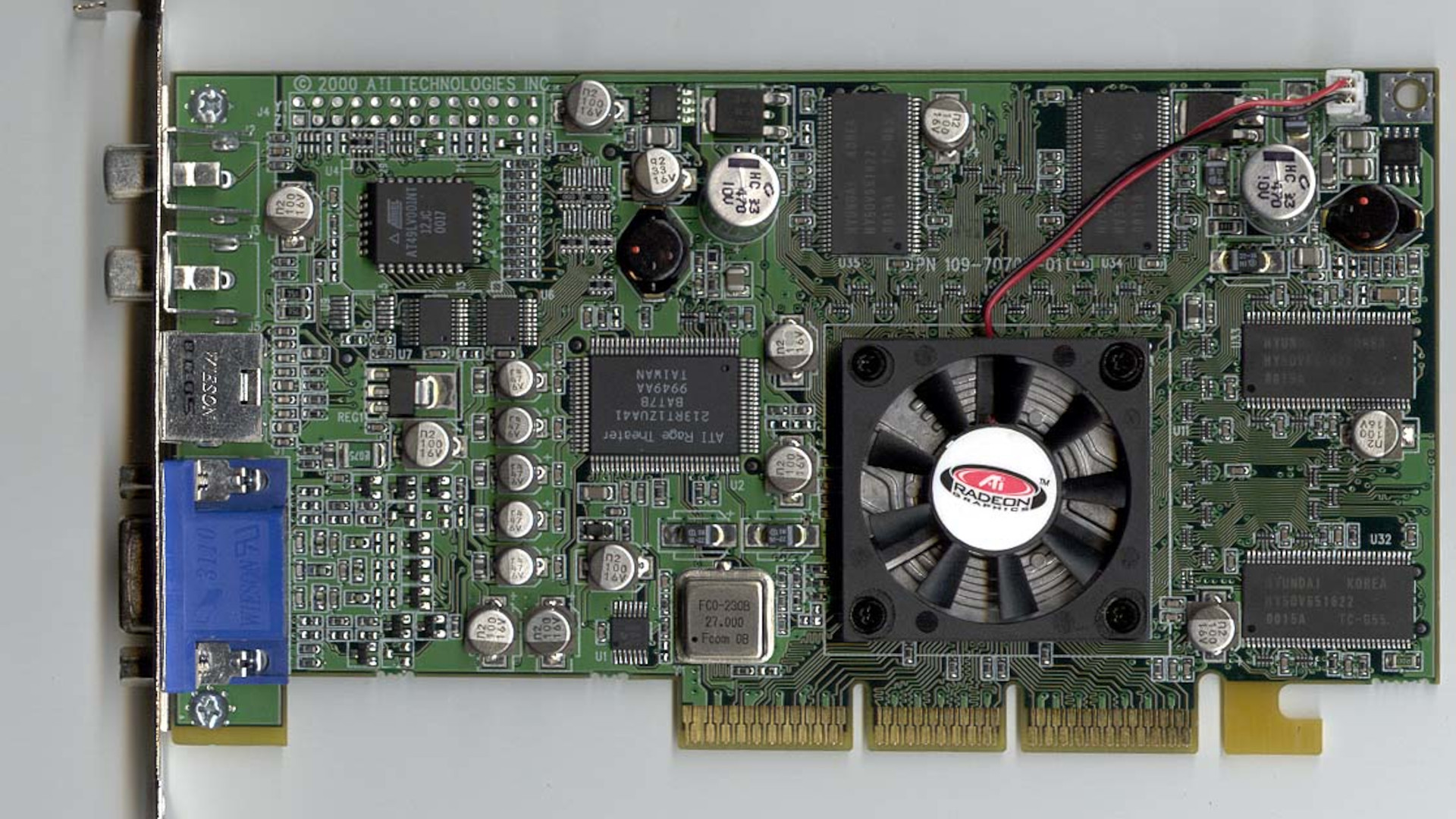

It seems like only yesterday that I got my hands on ATI Technologies' first and much-anticipated Radeon graphics card, but time has a funny way of playing tricks on your memory: it was 25 years ago, to this very week, that I easily picked one up at launch (how times have changed). The card joined my reasonably sized collection of graphics cards; on paper, it was the best of them all.

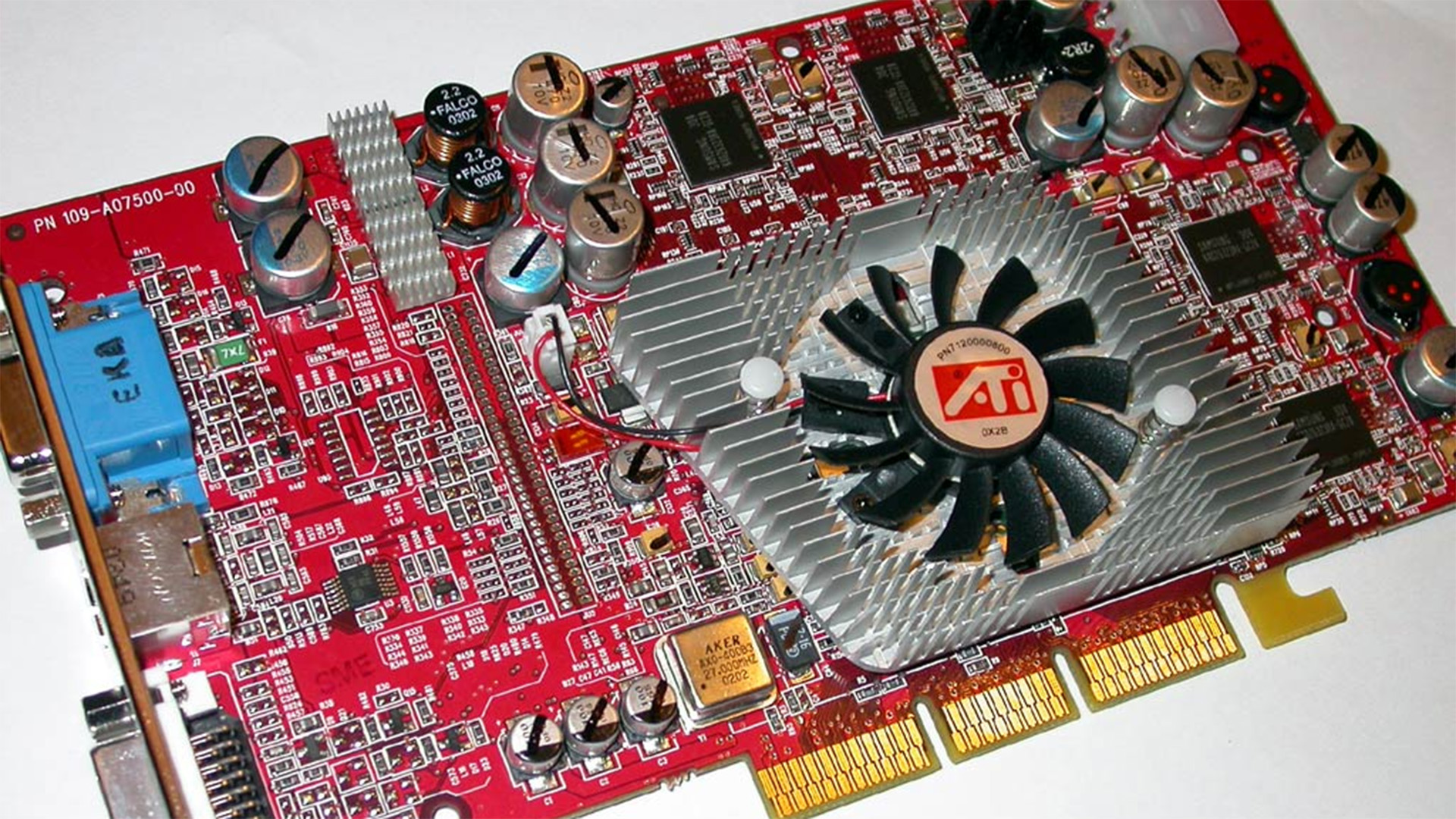

But what transpired over the following months not only put me off Radeon cards for a good while, until the 9700 appeared in 2002, but also set the tone for how ATI, and eventually AMD, would fare against Nvidia in the graphics card market.

But let's start with why I was so looking forward to the first Radeon. At that time, I had around 12 graphics cards spread across a number of PCs, most of which were used for testing. I can't remember everything I had, but I do recall cards from Nvidia, Matrox, and 3dfx among them.

Just a few weeks later, Nvidia launched its NV15 processor and within a few days, a GeForce2 GTS arrived on my doorstep. Essentially a pumped-up GeForce 256, the new Nvidia chip sported a much higher clock speed than its predecessor, along with twin texture units in each pixel pipeline. But that was it, and the Radeon DDR still looked to be the better product (more features, better tech), even though it offered less peak performance.

As one coding legend put it at the time: "The Radeon has the best feature set available, with several advantages over GeForce…On paper, it is better than GeForce in almost every way." When it came to actually using the two cards in games, though, the raw grunt of the GeForce2 GTS won out over the Radeon DDR, except in high-resolution 32-bit colour gaming.

That's partly because no game was using the extra features sported by the Radeon, but it was mostly because the GeForce2's four pixel pipelines with dual texture units (aka TMUs) were better suited to the type of rendering used back then. ATI's triple-TMU approach was ideal for any game that did lots of rendering passes to generate the graphics, but none were doing this, and it would be years before games did. By then, of course, there would be a newer and faster GPU on the market.

Something else that Nvidia did better than ATI was driver support, specifically stable drivers. I spent more time dealing with crashes, rendering bugs, twitchy behaviour when playing in 16-bit mode, and so on than I ever did with any of my Nvidia cards. The Radeon DDR was also my first introduction to the world of 'coil whine', as that particular card squealed and squeaked constantly. I spent many weeks conducting back-and-forth tests with ATI to try to narrow down the source of the issue, but to no avail.

Drivers would continue to be ATI's bugbear for years to come, even after AMD acquired the company in 2006. By then, ATI's brief spell of glory (and its moments of abject misery) had passed, despite some strong later cards. Nvidia's graphics cards weren't necessarily any faster, nor did they sport any better features, but the drivers were considerably better, and that made the difference in games.

Over the years, AMD would try again with all kinds of 'futureproof' GPU designs, such as compute-focused chips paired with on-package High Bandwidth Memory (HBM). Where Team Red always seemed to be looking ahead, Nvidia was focused on the here and now, and it wasn't until 2019 that AMD finally stopped trying to over-engineer its GPU designs and stuck to making them good at gaming, in the form of RDNA.

But even now, AMD just can't seem to help itself. Take the chiplet approach of its last-gen cards: it didn't make a jot of difference to AMD's profit margins or its discrete GPU market share, or even make the graphics cards any faster or cheaper. With the recent RX 9070 lineup, though, Team Red has finally produced a graphics card that PC gamers need now. Even its driver team is on the ball, and I can't recall when I last said that about AMD.

Arguably, Nvidia seems to have gone down an old Team Red road of late, what with its latest graphics cards being barely any faster than the previous generation but replete with features for the future. But when you effectively control the GPU market, you can afford to have a few hiccups; AMD, on the other hand, has to play it safe.

I just wish it had done so years ago, when it was battling toe-to-toe with Nvidia at retailers. The RX 9070 lineup is the best AMD has released in a long time, and although prices are all over the place at the moment, the fundamental product is really good (and far better than I expected it to be). Just imagine how different things would be today if AMD had used the same mindset behind the design of the 9070 for everything between the X1800-series and the first RDNA card.

Will Radeon still be a household name in another 25 years? I might not be around to see if it comes to pass, but I wouldn't bet against it: despite all its trials and tribulations, it's still here and still going strong.